Facebook avoided some of the toughest inquiries from reporters yesterday during a conference call about its efforts to fight election interference and fake news. The company did provide additional transparency on important topics by subjecting itself to intense questioning from a gaggle of its most vocal critics. A few bits of interesting news did emerge:

- Facebook’s fact-checking partnerships now extend to 17 countries, up from 14 last month.

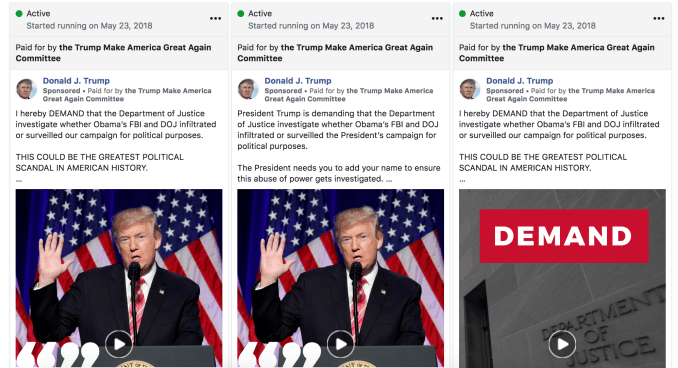

- Top searches in its new political ads archive include California, Clinton, Elizabeth Warren, Florida, Kavanaugh, North Carolina, and Trump; and its API for researchers will open in August.

- To give political advertisers a quicker path through its new verification system, Facebook is considering a preliminary location check that would later expire unless they verify their physical mailing address.

Yet deeper questions went unanswered. Will it be transparent about downranking accounts that spread false news? Does it know if the midterm elections are already being attacked? Are politically divisive ads cheaper?

UNITED STATES – APRIL 11: Facebook CEO Mark Zuckerberg prepares to testify before a House Energy and Commerce Committee in Rayburn Building on the protection of user data on April 11, 2018. (Photo By Tom Williams/CQ Roll Call) // Flickr CC Sean P. Anderson

Here’s a selection of the most important snippets from the call, followed by a discussion of how it evaded some critical topics.

Fresh facts and perspectives

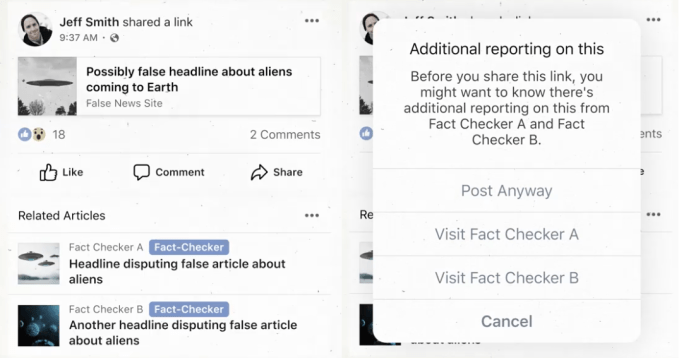

On Facebook’s approach of downranking instead of deleting fake news:

Tessa Lyons, Product Manager for the News Feed: “If you are who you say you are and you’re not violating our Community Standards, we don’t believe we should stop you from posting on Facebook. This approach means that there will be information posted on Facebook that is false and that many people, myself included, find offensive . . . Just because something is allowed to be on Facebook doesn’t mean it should get distribution . . . We know people don’t want to see false information at the top of their News Feed and we believe we have a responsibility to prevent false information from getting broad distribution. This is why our efforts to fight disinformation are focused on reducing its spread.

–When we take action to reduce the distribution of misinformation in News Feed, what we’re doing is changing the signals and predictions that inform the relevance score for each piece of content. Now, what that means is that information, that content appears lower in everyone’s News Feed who might see it, and so fewer people will actually end up encountering it.

Image: Bryce Durbin/TechCrunch

–Now, the reason that we strike that balance is because we believe we are working to strike the balance between expression and the safety of our community.

–If a piece of content or an account violates our Community Standards, it’s removed; if a Page repeatedly violates those standards, the Page is removed. On the side of misinformation — not Community Standards — if an individual piece of content is rated false, its distribution is reduced; if a Page or domain repeatedly shares false information, the entire distribution of that Page or domain is reduced.”

On how Facebook disrupts misinformation operations targeting elections:

Nathaniel Gleicher, Head of Cybersecurity Policy: “For each investigation, we identify particular behaviors that are common across threat actors. And then we work with our product and engineering colleagues as well as everyone else on this call to automate detection of these behaviors and even modify our products to make those behaviors much more difficult. If manual investigations are like looking for a needle in a haystack, our automated work is like shrinking that haystack. It reduces the noise in the search environment which directly stops unsophisticated threats. And it also makes it easier for our manual investigators to corner the more sophisticated bad actors.

In turn, those investigations keep turning up new behaviors which fuels our automated detection and product innovation. Our goal is to create this virtuous circle where we use manual investigations to disrupt sophisticated threats and continually improve our automation and products based on the insights from those investigations. Look for the needle and shrink the haystack.”

TechCrunch/Bryce Durbin

On reactions to political ads labeling, improving the labeling process, and the ads archive:

Rob Leathern, Product Manager for Ads: “On the revenue question, the political ads aren’t a large part of our business from a revenue perspective but we do think it’s very important to be giving people tools so they can understand how these ads are being used.

–I do think we have definitely seen some folks have some indigestion about the process of getting authorized. We obviously think it’s an important tradeoff and it’s the right tradeoff to make. We’re definitely exploring ways to reduce the time for them from starting the authorization process to being able to place an ad. We’re considering a preliminary location check that might expire after a certain amount of time, which would then become permanent once they verify their physical mailing address and receive the letter that we send to them.

–We’re actively exploring ways to streamline the authorization process and are clarifying our policy by providing examples on what ad copy would require authorization and a label and what would not.

–We also plan to add more information to the Info and Ads tab for Pages. Today you can see when the Page was created, previous Page names, but over time we hope to add more context for people there in addition to the ads that that Page may have run as well.”

Dodged questions

On transparency about downranking accounts

Facebook has been repeatedly asked to clarify the lines it draws around content moderation. It’s arrived at a controversial policy where content is allowed even if it spreads fake news, gets downranked in News Feed if fact checkers verify the information is false, and gets deleted if it incites violence or harasses other users. Repeat offenders in the latter two categories can get their whole profile, Page, or Group downranked or deleted.

But that surfaces secondary questions about how transparent it is about these decisions and their impacts on the reach of false news. Hannah Kuchler of The Financial Times and Sheera Frenkel of The New York Times pushed Facebook on this topic. Specifically, the latter asked “I was wondering if you have any intention going forward to be transparent about who is going — who is down-ranked and are you keeping track of the effect that down-ranking a Page or a person in the News Feed has and do you have those kinds of internal metrics? And then is that also something that you’ll eventually make public?”

Facebook has said that if a post is fact-checked as false, it’s downranked and loses 80% of its future views through News Feed. But that ignores the fact that it can take three days for fact checkers to get to some fake news stories, by which point those stories have likely already received the majority of their distribution. Facebook has yet to explain how a false rating from fact checkers reduces a story’s total views before and after the decision, or what the ongoing reach reduction is for accounts that are downranked as a whole for repeatedly sharing false-rated news.
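To make the gap between "future views" and "total views" concrete, here is a minimal back-of-the-envelope sketch. The 80% figure is Facebook's stated number; the shares of views that arrive before a rating are purely hypothetical inputs, since Facebook has not published them, and the function name is ours.

```python
def total_view_reduction(share_seen_before_rating: float,
                         future_reduction: float = 0.80) -> float:
    """Fraction of a story's would-be lifetime views removed by a demotion
    that only affects views arriving after the fact-check rating.

    share_seen_before_rating: hypothetical share (0..1) of lifetime views
        the story collects before fact checkers rate it.
    future_reduction: cut applied to the remaining views (Facebook's
        stated figure is 80%).
    """
    if not 0.0 <= share_seen_before_rating <= 1.0:
        raise ValueError("share_seen_before_rating must be between 0 and 1")
    if not 0.0 <= future_reduction <= 1.0:
        raise ValueError("future_reduction must be between 0 and 1")
    remaining_share = 1.0 - share_seen_before_rating
    return remaining_share * future_reduction


# Hypothetical scenarios: how much of the story's audience arrived
# before the rating landed.
for share in (0.0, 0.5, 0.75, 0.9):
    cut = total_view_reduction(share)
    print(f"{share:.0%} of views before rating: "
          f"{cut:.0%} of lifetime views removed")
```

Under these assumptions, the later the rating arrives, the further the real-world reduction falls below the headline 80%, which is why the before-and-after numbers matter.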

Lyons only answered regarding what happens to individual posts rather than providing the requested information about the impact on downranked accounts:

Lyons: “If you’re asking specifically will we be transparent about the impact of fact-checking on demotions, we are already transparent about the rating that fact-checkers provide . . . In terms of how we notify Pages when they share information that’s false, any time any Page or individual shares a link that has been rated false by fact-checkers, if we already have a false rating we warn them before they share, and if we get a false rating after they share, we send them a notification. We are constantly transparent, particularly with Page admins, but also with anybody who shares information about the way in which fact-checkers have evaluated their content.”

On whether politically divisive ads are cheaper and more effective

A persistent question about Facebook’s ads auction is whether it favors inflammatory political ads over neutral ones. The auction system is designed to prioritize more engaging ads because boring ads are more likely to push users off the social network, which reduces future ad views. The concern is that by making ads that stoke divisions more cost-efficient, Facebook may be incentivizing political candidates and bad actors trying to interfere with elections to polarize society.
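The worry is easier to see with a toy model. The sketch below is not Facebook's actual auction, whose formula is not described here; it simply assumes a ranking score equal to the advertiser's bid multiplied by a predicted engagement rate. Every name and number in it is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    name: str
    cpm_bid: float               # dollars offered per 1,000 impressions
    predicted_engagement: float  # hypothetical 0..1 estimate of interaction


def rank_score(ad: Ad) -> float:
    """Toy ranking rule (an assumption): bid weighted by predicted engagement."""
    return ad.cpm_bid * ad.predicted_engagement


def bid_needed_to_match(target_score: float, predicted_engagement: float) -> float:
    """CPM bid an ad needs to reach target_score under the toy rule."""
    if predicted_engagement <= 0:
        raise ValueError("predicted_engagement must be positive")
    return target_score / predicted_engagement


# Hypothetical ads: a neutral message and a more provocative one.
neutral = Ad("neutral", cpm_bid=10.0, predicted_engagement=0.02)
incendiary_engagement = 0.05

needed = bid_needed_to_match(rank_score(neutral), incendiary_engagement)
print(f"Neutral ad bids ${neutral.cpm_bid:.2f} CPM")
print(f"Incendiary ad needs about ${needed:.2f} CPM for the same score")
```

In any system shaped like this, whatever drives engagement up drives the price of equal placement down. Whether Facebook's real auction behaves this way for divisive political ads is exactly what reporters were asking.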

Deepa Seetharaman of The Wall Street Journal surfaced this on the call, saying “I’m talking to a lot of campaign strategists coming up to the 2018 election. One theme that I continuously hear is that the more incendiary ads, do better but the effective CPMs on those particular ads are lower than, I guess, neutral or more positive messaging. Is that a dynamic that you guys are comfortable with? And is there anything that you’re doing to kind of change the kind of ads that succeeds through the Facebook ad auction system?”

Facebook’s Leathern used a defense similar to the one Facebook has relied on to challenge questions about whether Donald Trump got cheaper ad rates during the 2016 election, claiming it was too hard to assess that given all the factors that go into determining ad prices and reach. Meanwhile, he ignored whether, regardless of the data, Facebook wanted to make changes to ensure divisive ads didn’t get preference.

Leathern: “Look, I think that it’s difficult to take a very specific slice of a single ad and use it to draw a broad inference which is one of the reasons why we think it’s important in the spirit of the transparency here to continue to offer additional transparency and give academics, journalists, experts, the ability to analyze this data across a whole bunch of ads. That’s why we’re launching the API and we’re going to be starting to test it next month. We do believe it’s important to give people the ability to take a look at this data more broadly. That, I think, is the key here — the transparency and understanding of this when seen broadly will give us a fuller picture of what is going on.”

On whether there’s evidence of midterm election interference

Facebook failed to adequately protect the 2016 US presidential election from Russian interference. Since then it’s taken a lot of steps to try to safeguard its social network, from hiring more moderators to political advertiser verification systems to artificial intelligence for fighting fake news and the fake accounts that share it.

Internal debates about approaches to the issue and a reorganization of Facebook’s security teams contributed to Facebook CSO Alex Stamos’ decision to leave the company next month. Yesterday, BuzzFeed’s Ryan Mac and Charlie Warzel published an internal memo by Stamos from March urging Facebook to change. “We need to build a user experience that conveys honesty and respect, not one optimized to get people to click yes to giving us more access . . . We need to listen to people (including internally) when they tell us a feature is creepy or point out a negative impact we are having in the world.” And today, Facebook’s Chief Legal Officer Colin Stretch announced his departure.

Facebook’s efforts to stop interference aren’t likely to have completely deterred those seeking to sway or discredit our elections, though. Evidence of Facebook-based attacks on the midterms could fuel calls for government regulation, investments in counter-cyberwarfare, and Robert Mueller’s investigation into Russia’s role.

WASHINGTON, DC – APRIL 11: Facebook co-founder, Chairman and CEO Mark Zuckerberg prepares to testify before the House Energy and Commerce Committee in the Rayburn House Office Building on Capitol Hill April 11, 2018 in Washington, DC. This is the second day of testimony before Congress by Zuckerberg, 33, after it was reported that 87 million Facebook users had their personal information harvested by Cambridge Analytica, a British political consulting firm linked to the Trump campaign. (Photo by Chip Somodevilla/Getty Images)

David McCabe of Axios and Cecilia Kang of The New York Times pushed Facebook to be clear about whether it had already found evidence of interference in the midterms. But Facebook’s Gleicher refused to specify. While it’s reasonable that he didn’t want to jeopardize Facebook’s or Mueller’s investigation, it’s something that Facebook should at least ask the government if it can disclose.

Gleicher: “When we find things and as we find things — and we expect that we will — we’re going to notify law enforcement and we’re going to notify the public where we can . . . And one of the things we have to be really careful with here is that as we think about how we answer these questions, we need to be careful that we aren’t compromising investigations that we might be running or investigations the government might be running.”

The answers we need

So Facebook, what’s the impact of a false rating from fact checkers on a story’s total views before and after it’s checked? Will you reveal when whole accounts are downranked and what the impact is on their future reach? Do politically incendiary ads that further polarize society cost less and perform better than politically neutral ads, and if so, will Facebook do anything to change that? And does Facebook already have evidence that the Russians or anyone else are interfering with the U.S. midterm elections?

We’ll see if any of the analysts who get to ask questions on today’s Facebook earnings call will step up.

from Social – TechCrunch https://ift.tt/2Oitd7B Dodged questions from Facebook’s press call on misinformation Josh Constine https://ift.tt/2JT7QGN
