People who rely on gaze-tracking to interact with their devices on an everyday basis now have a powerful new tool in their arsenal: Google Assistant. With gaze substituting for its original voice-based interface, the Assistant’s many integrations and communication tools should expand the capabilities of the Tobii Dynavox devices it now works on.

Assistant can now be added as a tile on Tobii’s eye-tracking tablets and mobile apps, which present a large customizable grid of commonly used items that the user can activate by looking at them. It acts as an intermediary with the large collection of other software and hardware interfaces that Google supports.

For instance, smart home appliances, which can be incredibly useful for people with certain disabilities, may not have an interface that is easily accessible from a gaze-tracking device, necessitating other means of control or perhaps limiting what actions a user can take. Google Assistant works with a great many of those appliances out of the box.

“Being able to control the things around you and ‘the world’ is central to many of our users,” said Tobii Dynavox’s CEO, Fredrik Ruben. “The Google Assistant ecosystem provides almost endless possibilities – and provides a lot of normalcy to our community of users.”

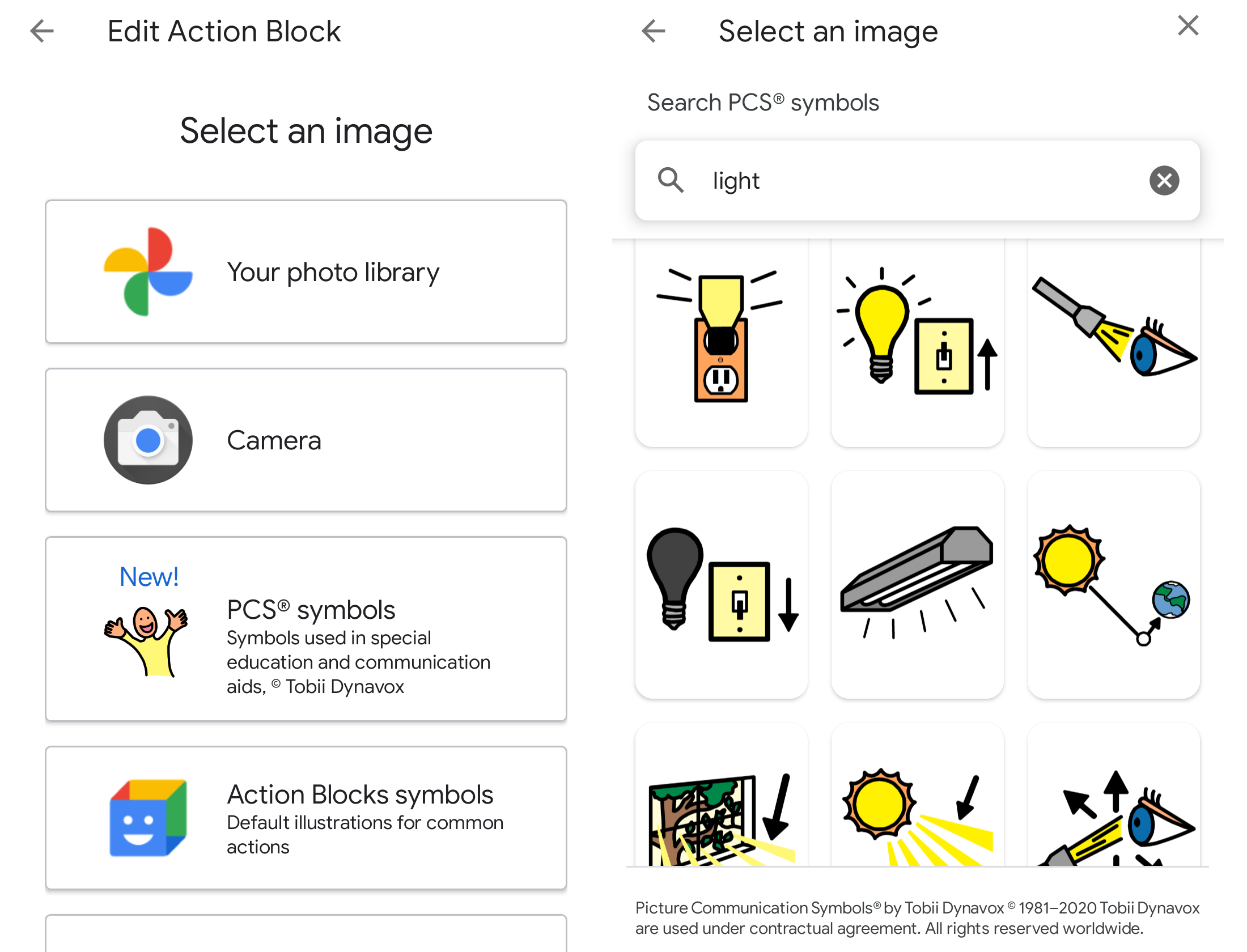

Users will be able to set up Assistant tiles for commands or apps, and automate inquiries like “what’s on my calendar today?” The setup process just requires a Google account, and then the gaze-tracking device (in this case Tobii Dynavox’s mystifyingly named Snap Core First app) has to be added to the Google Home app as a smart speaker/display. Then Assistant tiles can be added to the interface and customized with whatever commands would ordinarily be spoken.

Ruben said the integration of Google’s software was “technically straightforward.” “Because our software itself is already built to support a wide variety of access needs and is set up to accommodate launching third-party services, there was a natural fit between our software and Google Assistant’s services,” he explained.

Tobii’s built-in library of icons (things like lights with an up arrow, a door being opened or closed, and other visual representations of actions) can also be applied easily to the Assistant shortcuts.

For Google’s part, this is just the latest in a series of interesting accessibility services the company has developed, including live transcription, detection of when sign language is being used in group video calls, and speech recognition that accommodates non-standard voices and people with speech impediments. Much of the web is not remotely accessible, but at least the major tech companies put in some good work now and then to help.
