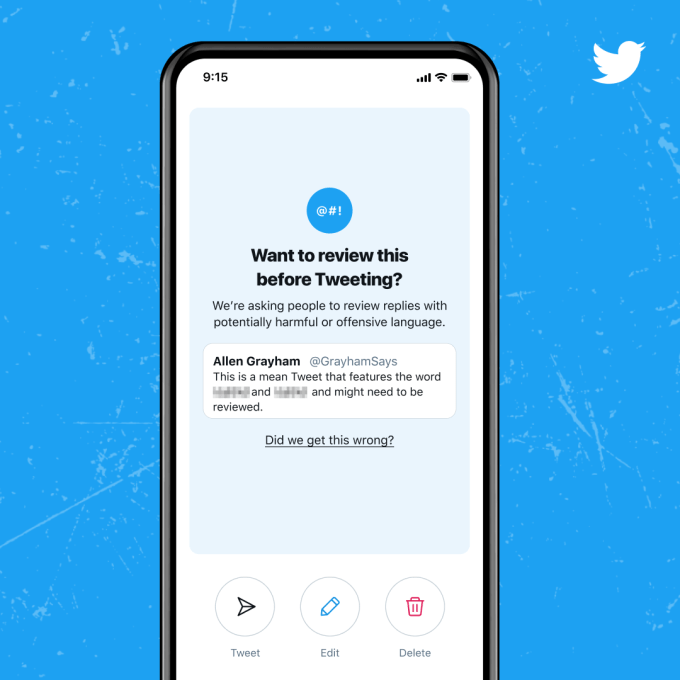

A year ago, Twitter began testing a feature that would prompt users to pause and reconsider before they replied to a tweet using “harmful” language, meaning language that was abusive, trolling, or otherwise offensive in nature. Today, the company says it’s rolling out improved versions of these prompts to English-language users on iOS and, soon, Android, after adjusting the systems that decide when to send the reminders so that they better recognize when the language in a reply is actually harmful.

The idea behind these forced slowdowns, or nudges, is to leverage psychological tricks to help people make better decisions about what they post. Studies have indicated that introducing a nudge like this can lead people to edit or cancel posts they would otherwise have regretted.

Twitter’s own tests found that to be true, too. It said that 34% of people revised their initial reply after seeing the prompt, or chose not to send the reply at all. And, after being prompted once, people then composed 11% fewer offensive replies in the future, on average. That indicates that the prompt, for some small group at least, had a lasting impact on user behavior. (Twitter also found that users who were prompted were less likely to receive harmful replies back, but didn’t further quantify this metric.)

Image Credits: Twitter

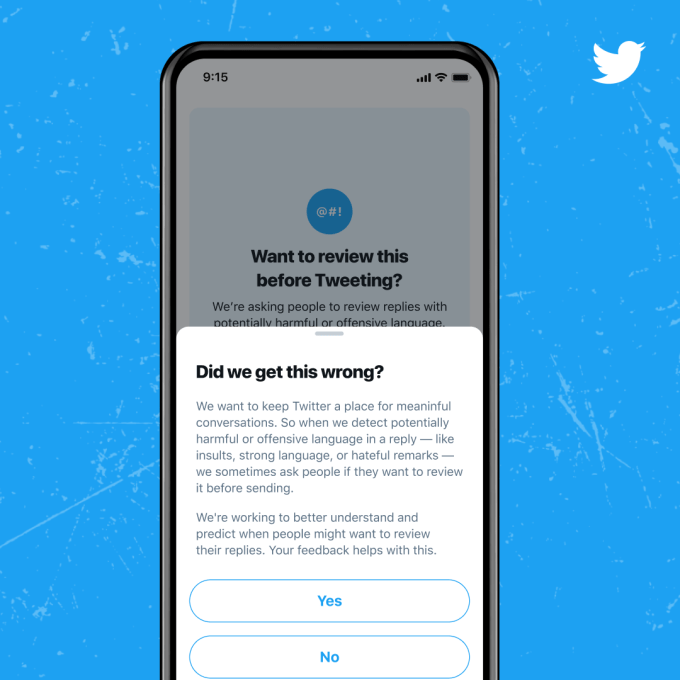

However, Twitter’s early tests ran into some problems. It found its systems and algorithms sometimes struggled to understand the nuance that occurs in many conversations. For example, they couldn’t always differentiate between offensive replies and sarcasm or, sometimes, even friendly banter. They also struggled to account for situations in which language is being reclaimed by underrepresented communities and then used in non-harmful ways.

The improvements rolling out starting today aim to address these problems. Twitter says it’s made adjustments to the technology across these areas, and others. Now, it will take the relationship between the author and replier into consideration. That is, if the two follow and reply to each other often, they are more likely to understand each other’s preferred tone of communication than two users who don’t.

Twitter says it has also improved the technology to more accurately detect strong language, including profanity.

And it’s made it easier for those who see the prompts to let Twitter know if the prompt was helpful or relevant — data that can help to improve the systems further.

How well this all works remains to be seen, of course.

Image Credits: Twitter

While any feature that can help dial down some of the toxicity on Twitter may be useful, this only addresses one aspect of the larger problem — people who get into heated exchanges that they could later regret. There are other issues across Twitter regarding abusive and toxic content that this solution alone can’t address.

These “reply prompts” aren’t the only time Twitter has used the concept of nudges to influence user behavior. It also reminds users to read an article before they retweet and amplify it, in an effort to promote more informed discussions on its platform.

Twitter says the improved prompts are rolling out to all English-language users on iOS starting today, and will reach Android over the next few days.

from Social – TechCrunch https://ift.tt/3xQjtYX Twitter rolls out improved ‘reply prompts’ to cut down on harmful tweets Sarah Perez https://ift.tt/3h7Mh9w

